The fundamental problem of causal analysis

25 Aug 2016

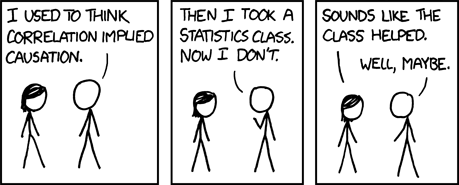

“Correlation does not imply causation” is one of those principles everyone who works with data should know. It is one of the first concepts taught in any introductory statistics class. There is a good reason for this, as most of the work of a data scientist or a statistician actually revolves around questions of causation:

- Did customers buy into product X or service Y because of last week's email campaign, or would they have converted regardless of whether we ran the campaign?

- Was there any effect of in-store promotion Z on the spending behavior of customers four weeks after the promotion?

- Did people with disease X get better because they took treatment Y, or would they have gotten better anyway?

Being able to distinguish between spurious correlations and true causal effects means a data scientist can truly add value to the company.
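To make the email campaign question concrete, here is a minimal simulated sketch. Everything in it is an assumption made for illustration: the hidden `loyal` trait, the probabilities, and the function names are hypothetical and not taken from any real data set. The email is given no effect at all on conversion, yet loyal customers are more likely both to receive it and to convert, so a naive comparison still shows an apparent uplift.

```python
import random


def conversion_rate(rows):
    """Share of customers in `rows` who converted."""
    return sum(converted for _, _, converted in rows) / len(rows)


def simulate(n=100_000, seed=0):
    """Simulate customers where the email has NO causal effect on conversion."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        # Hypothetical confounder: 30% of customers are "loyal".
        loyal = rng.random() < 0.3
        # Loyal customers are more likely to receive the campaign email.
        emailed = rng.random() < (0.8 if loyal else 0.2)
        # Conversion depends only on loyalty, never on the email.
        converted = rng.random() < (0.5 if loyal else 0.1)
        rows.append((loyal, emailed, converted))

    def uplift(subset):
        # Difference in conversion rate: emailed minus not emailed.
        treated = [r for r in subset if r[1]]
        control = [r for r in subset if not r[1]]
        return conversion_rate(treated) - conversion_rate(control)

    return {
        "naive": uplift(rows),
        "loyal": uplift([r for r in rows if r[0]]),
        "not_loyal": uplift([r for r in rows if not r[0]]),
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name:>10}: {value:+.3f}")
```

The naive uplift comes out clearly positive, while the uplift within each loyalty group is close to zero. In real data the confounder is usually not observed this cleanly, which is why more careful methods are needed.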

This is where traditional statistical methods, such as experimental design, come into play. Although experimental design is perhaps not commonly associated with the field of data science, more and more data scientists are using its principles. Data scientists at Twitter use these principles to correct for hidden bias in their A/B tests, engineers from Google have developed a whole R package [1] around causal analysis, and at Tesco we use these principles to attribute changes in customer spending behavior to promotions customers participated in.

In this post we will have a look at some of the frequently used methods in causal analysis. First, we will go through a little bit of theory, and talk about why we need causal analysis in the first place (the fundamental problem of causal analysis). I will then introduce you to propensity score matching methods, which are one way of dealing with observational data sets. We will wrap up with a discussion about other methods, and I have also put up an IPython notebook that walks you through an example data set.

1. Brodersen, K. H., Gallusser, F., Koehler, J., Remy, N., & Scott, S. L. (2015). Inferring causal impact using Bayesian structural time-series models. The Annals of Applied Statistics, 9(1), 247-274. https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/41854.pdf